Development of a sensor fusion method for AR-based surgical navigation

Current surgical navigation systems are mostly limited to displaying their results on external monitors in the vicinity of the patient, which forces the surgeon to switch between the displayed planning and the surgical site. Augmented reality (AR) devices reduce this problem by directly displaying the planning in the surgeon’s field of view.

The goal of this project was to demonstrate and evaluate the feasibility of using AR for surgical navigation. The work focused on implementing a visual marker registration and pose estimation algorithm and on developing an inertial-optical sensor fusion approach.

Materials

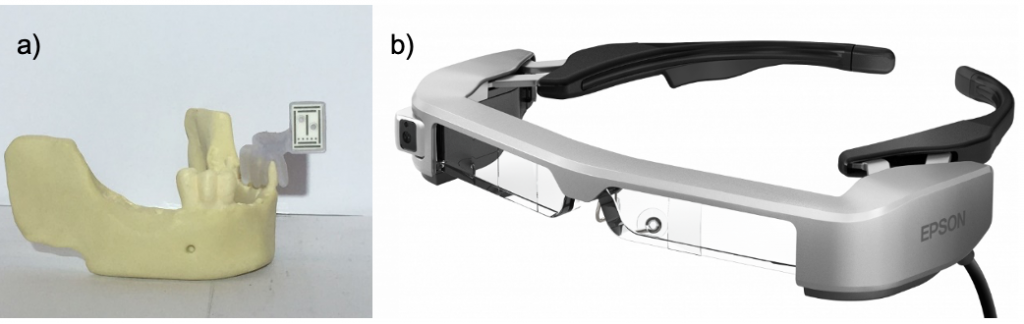

The work uses the Moverio BT-35E (EPSON) and the visual marker DENAMARK (mininavident AG). The BT-35E has a built-in camera and an inertial measurement unit (IMU). The proposed system is implemented in C++ on a Windows computer that is connected to the AR device.

Methods

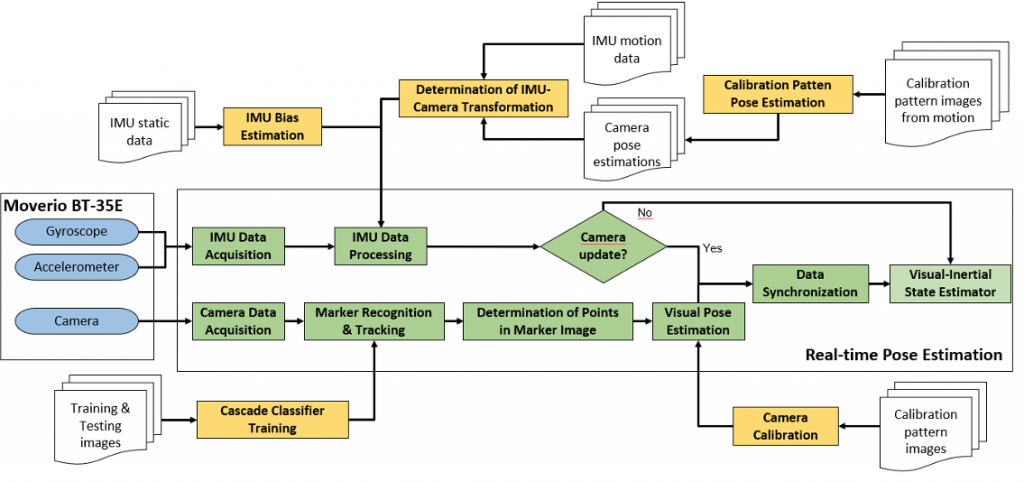

The overall software concept is shown in Figure 2. It is divided into scripts for offline parameter calculations (yellow) and the live data processing (green).

A classifier searches each camera frame for the visual marker. Within the identified marker region, a set of characteristic points on the marker is located. These 2D image points are then matched to their known 3D coordinates on the marker to estimate its pose. The pose estimation relies on Infinitesimal Plane-based Pose Estimation (IPPE).
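The 2D-3D matching step can be illustrated with the reprojection criterion that pose estimators of this kind typically evaluate: a candidate pose is good if the known 3D marker points, projected through a pinhole camera model, land on the detected 2D points. The following is a minimal sketch of that check only, not the IPPE solver and not the project's code. All type and function names (`Pose`, `Intrinsics`, `project`, `rmsReprojectionError`) are hypothetical, and lens distortion is assumed to be already removed.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 { double x, y; };
struct Vec3 { double x, y, z; };

// Rigid pose of the marker in the camera frame: p_cam = R * p_marker + t.
struct Pose {
    double R[3][3];  // rotation matrix, row-major
    Vec3 t;          // translation, same unit as the marker points
};

// Pinhole intrinsics, assumed to come from an offline camera calibration.
struct Intrinsics { double fx, fy, cx, cy; };

// Project one 3D marker point into pixel coordinates.
Vec2 project(const Intrinsics& K, const Pose& pose, const Vec3& p) {
    const double xc = pose.R[0][0] * p.x + pose.R[0][1] * p.y + pose.R[0][2] * p.z + pose.t.x;
    const double yc = pose.R[1][0] * p.x + pose.R[1][1] * p.y + pose.R[1][2] * p.z + pose.t.y;
    const double zc = pose.R[2][0] * p.x + pose.R[2][1] * p.y + pose.R[2][2] * p.z + pose.t.z;
    assert(zc > 0.0);  // the point must lie in front of the camera
    return { K.fx * xc / zc + K.cx, K.fy * yc / zc + K.cy };
}

// Root-mean-square pixel distance between projected and detected points.
double rmsReprojectionError(const Intrinsics& K, const Pose& pose,
                            const std::vector<Vec3>& markerPoints,
                            const std::vector<Vec2>& imagePoints) {
    assert(!markerPoints.empty() && markerPoints.size() == imagePoints.size());
    double sumSq = 0.0;
    for (std::size_t i = 0; i < markerPoints.size(); ++i) {
        const Vec2 uv = project(K, pose, markerPoints[i]);
        const double dx = uv.x - imagePoints[i].x;
        const double dy = uv.y - imagePoints[i].y;
        sumSq += dx * dx + dy * dy;
    }
    return std::sqrt(sumSq / static_cast<double>(markerPoints.size()));
}
```

A low RMS value indicates that the estimated pose explains the detected points well; the same projection is what draws a reprojected coordinate frame onto the camera image.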

The acquired visual pose is corrected (or interpolated if the marker is not visible) using the angular velocity and acceleration measurements provided by the BT-35E's IMU. A linear Kalman filter is used for the visual-inertial sensor fusion.
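The predict/correct structure of such a fusion can be sketched with a linear Kalman filter for a single translation axis. This is a simplified illustration under stated assumptions, not the project's filter: the state is reduced to position and velocity, the IMU acceleration is treated as a control input that is already gravity-compensated and expressed in the tracking frame, and the visual pose supplies a position measurement. The struct name `KalmanAxis` and the noise parameters `q` and `r` are hypothetical.

```cpp
#include <cmath>

// Linear Kalman filter for one axis: state x = [position, velocity].
struct KalmanAxis {
    double p = 0.0;                      // estimated position
    double v = 0.0;                      // estimated velocity
    double P[2][2] = {{1.0, 0.0}, {0.0, 1.0}};  // state covariance
    double q = 0.1;                      // acceleration noise variance (assumed)
    double r = 0.5;                      // visual measurement noise variance (assumed)

    // Prediction from an IMU acceleration sample a over the time step dt.
    // Model: x <- F x + B a with F = [[1, dt], [0, 1]], B = [dt^2/2, dt].
    void predict(double a, double dt) {
        p += v * dt + 0.5 * a * dt * dt;
        v += a * dt;
        // P <- F P F^T + q * B B^T
        const double b0 = 0.5 * dt * dt, b1 = dt;
        const double P00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1];
        const double P01 = P[0][1] + dt * P[1][1];
        const double P10 = P[1][0] + dt * P[1][1];
        const double P11 = P[1][1];
        P[0][0] = P00 + q * b0 * b0;
        P[0][1] = P01 + q * b0 * b1;
        P[1][0] = P10 + q * b1 * b0;
        P[1][1] = P11 + q * b1 * b1;
    }

    // Correction with a visual position measurement z (H = [1, 0]).
    void update(double z) {
        const double S = P[0][0] + r;    // innovation covariance
        const double K0 = P[0][0] / S;   // Kalman gain, position
        const double K1 = P[1][0] / S;   // Kalman gain, velocity
        const double y = z - p;          // innovation
        p += K0 * y;
        v += K1 * y;
        // P <- (I - K H) P
        const double P00 = (1.0 - K0) * P[0][0];
        const double P01 = (1.0 - K0) * P[0][1];
        const double P10 = P[1][0] - K1 * P[0][0];
        const double P11 = P[1][1] - K1 * P[0][1];
        P[0][0] = P00; P[0][1] = P01; P[1][0] = P10; P[1][1] = P11;
    }
};
```

In use, `predict` would be called for every IMU sample and `update` only for frames in which the marker was detected. When the marker is not visible, the estimate is carried forward by prediction alone and its covariance grows until the next visual measurement arrives.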

Results

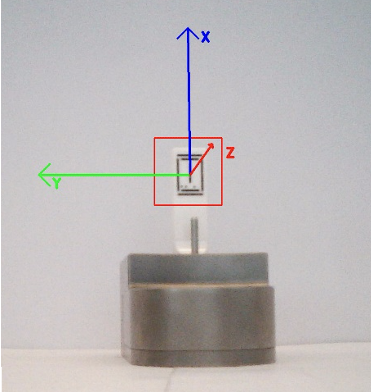

The results of the visual pose estimation are depicted in Figure 3. The red rectangle marks the marker region of interest found by the classifier; the coordinate frame is the reprojection of the found pose by the visual pose estimator.

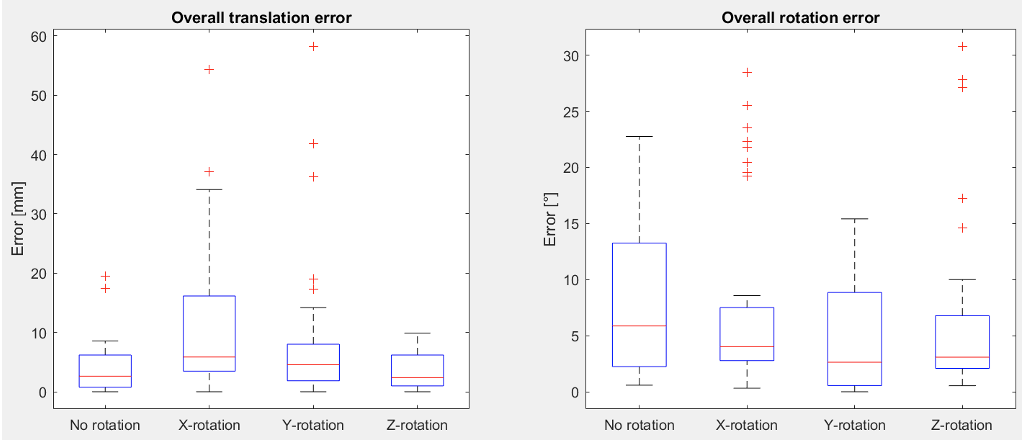

The visual pose estimation was validated using the Optotrak Certus. The marker was observed by the camera in four different orientations (no rotation toward the camera, and rotations of approx. 30° about the x, y and z axes toward the camera) and at multiple distances. The system had an overall median translation error of 3.69 mm (min. 0 mm, max. 58.27 mm). The overall median rotation error was 3.56° (min. 0°, max. 30.8°).
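The source does not state how the translation and rotation errors were computed. A common convention, assumed here purely for illustration, is the Euclidean distance between estimated and reference positions and the angle of the relative rotation between estimated and reference orientations, summarized by the median over all samples. The names `Mat3`, `translationError`, `rotationErrorDeg` and `median` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };
struct Mat3 { double m[3][3]; };  // rotation matrix, row-major

// Euclidean distance between estimated and reference position.
double translationError(const Vec3& est, const Vec3& ref) {
    const double dx = est.x - ref.x, dy = est.y - ref.y, dz = est.z - ref.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Angle (degrees) of the relative rotation Ra^T * Rb,
// from angle = acos((trace(Ra^T Rb) - 1) / 2).
double rotationErrorDeg(const Mat3& Ra, const Mat3& Rb) {
    double trace = 0.0;  // trace(Ra^T Rb) equals the sum of element-wise products
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            trace += Ra.m[i][j] * Rb.m[i][j];
    // Clamp to [-1, 1] to guard against rounding before acos.
    const double c = std::max(-1.0, std::min(1.0, (trace - 1.0) / 2.0));
    const double pi = std::acos(-1.0);
    return std::acos(c) * 180.0 / pi;
}

// Median of a sample set (takes a copy because sorting reorders it).
double median(std::vector<double> values) {
    assert(!values.empty());
    std::sort(values.begin(), values.end());
    const std::size_t n = values.size();
    return (n % 2 == 1) ? values[n / 2]
                        : 0.5 * (values[n / 2 - 1] + values[n / 2]);
}
```

The median is less sensitive to occasional outliers than the mean, which matters when the maximum error is far above the typical one, as in the figures reported above.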

The measured errors are too large for surgical applications. It should be noted, however, that the algorithm presented in this work relies on only one camera, whereas most systems in the literature use two cameras and much larger visual markers. The system provides an overall proof of concept for a visual pose estimator using the lightweight BT-35E and the DENAMARK marker.
